Mindful is a tool designed to help individuals document harassment and abuse on social media, giving targets of online harassment greater agency and support in how they respond. By making it easier to capture and organize evidence of online abuse, Mindful empowers users to protect their psychological well-being and physical safety while contributing to a broader understanding of digital harms.

Vision

To enable everyone to participate online without fear of harassment, by providing tools that not only support those experiencing abuse but also generate insights that push for stronger protections, healthier online communities, and a safer internet for all.

Goals

The project aims to:

- Empower individuals facing online harassment by giving them greater agency to document and respond to attacks on social media, beginning with X (formerly Twitter)

- Simplify evidence collection by providing easy-to-use tools for capturing, organizing, and securely storing records of harassment and abuse

- Support psychological and physical safety by connecting users with resources, strategies, and community-driven support networks to reduce harm

- Advance research and advocacy by building a crowdsourced dataset on online abuse that can inform policy, strengthen platform accountability, and promote a safer digital environment

Why This Matters

41% of Americans have experienced online abuse. Harassment disproportionately affects people of color, LGBTQ+ folks, and other marginalized groups, as well as women in professions like journalism, activism, and politics. As social media platforms loosen content moderation policies and pull resources from Trust & Safety teams, experts expect abuse to increase. For folks experiencing harassment, the first step towards receiving support is providing evidence of what happened.

But the evidence collection process is painful and laborious: users must scroll through hundreds of abusive posts, screenshotting and compiling evidence as they go. Researchers and domain experts have proposed many interventions and design choices to better protect targets of harassment, including frequent calls for better documentation support. Yet few tools exist to help users document and combat online abuse without costly platform APIs (Pew Research Center, 2022). Tools that once helped have been shuttered or redirected because of the prohibitive cost of platform API access. Moreover, much online abuse is not prosecutable by law, and content moderation policies permit transphobic hate speech, so many users may feel there’s nothing they can do with their abuse reports. This discourages the documentation and reporting of harassment and contributes to a lack of public knowledge about the scale and nature of social media abuse.

How It Works

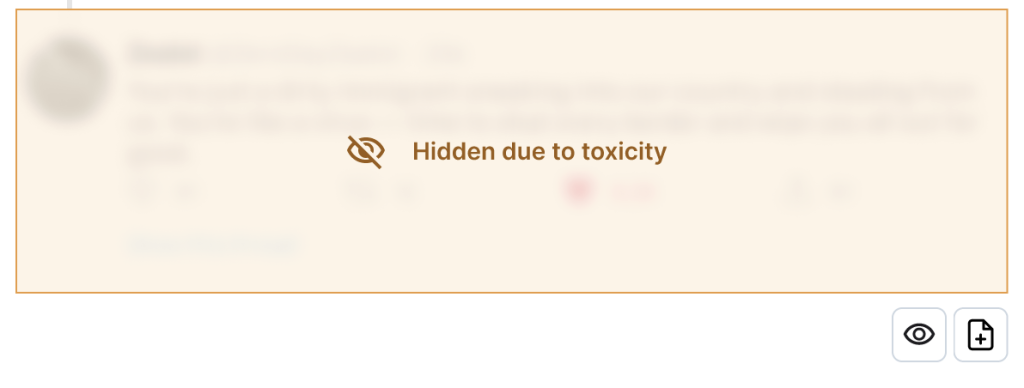

Mindful will use a combination of keyword- and AI-based detection to identify, blur, and document harassing content—allowing people experiencing online abuse to gather evidence without being forced to re-live the harassment.

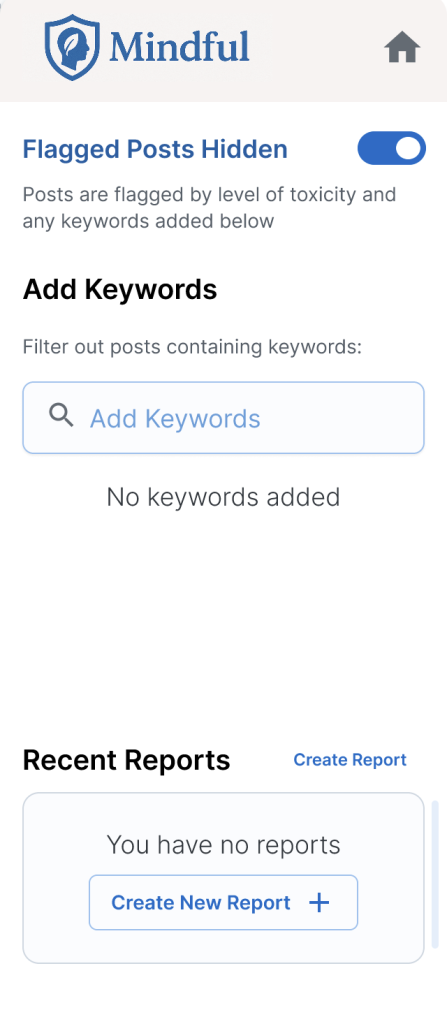

Automated and Customized Detection

Mindful will use AI to take an initial pass at detecting and flagging possible harassment. Because AI detection alone has limitations, users will also be able to add custom keywords that existing moderation systems may overlook. Posts flagged as harassment will be automatically blurred from view, with the option to reveal or re-hide them as needed.
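As a rough illustration of how the two detection signals described above could be combined, here is a minimal Python sketch. It is not Mindful's implementation: the `Post` class, the `flag_post` and `toggle_blur` functions, the 0.8 threshold, and the idea that the AI model returns a score between 0 and 1 are all assumptions made for this example.

```python
import re
from dataclasses import dataclass, field
from typing import List


@dataclass
class Post:
    """Hypothetical record for one social media post."""
    post_id: str
    text: str
    flagged: bool = False
    blurred: bool = False
    reasons: List[str] = field(default_factory=list)


def matching_keywords(text: str, keywords: List[str]) -> List[str]:
    """Return the custom keywords that appear in the text as whole words."""
    hits = []
    for keyword in keywords:
        # Lookarounds stop "ass" from matching inside "class".
        pattern = r"(?<!\w)" + re.escape(keyword) + r"(?!\w)"
        if re.search(pattern, text, flags=re.IGNORECASE):
            hits.append(keyword)
    return hits


def flag_post(post: Post, ai_score: float, keywords: List[str],
              threshold: float = 0.8) -> Post:
    """Flag a post if either the AI score or a custom keyword triggers.

    ai_score stands in for the output of any harassment classifier;
    the 0.8 threshold is an arbitrary value chosen for illustration.
    """
    reasons = []
    if ai_score >= threshold:
        reasons.append("ai")
    reasons.extend(f"keyword:{kw}" for kw in matching_keywords(post.text, keywords))
    post.reasons = reasons
    post.flagged = bool(reasons)
    post.blurred = post.flagged  # flagged posts start out blurred
    return post


def toggle_blur(post: Post) -> None:
    """Let the user reveal or re-hide a flagged post."""
    if post.flagged:
        post.blurred = not post.blurred
```

In this sketch a post is flagged when either signal fires, so a user's custom keyword can catch content that the classifier scores as harmless. The recorded reasons make it possible to show the user why a post was hidden.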

Easy Evidence Capture with Screenshots

Since compiling evidence is often the first step in seeking support—from platforms, employers, or law enforcement—Mindful will include a one-click screenshot tool. Users will be able to quickly capture and save any hidden post, either to their device or directly into a case report.

Report Creation and Management

Mindful will allow users to organize their evidence into structured reports that can be shared with trusted support networks, employers, social media platforms, legal representatives, or authorities. These reports will streamline the process of documenting harassment and make it easier for users to access the help and accountability they need.
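One way to picture a structured report is as a titled collection of evidence items that can be exported in a shareable format. The Python sketch below is only an illustration under stated assumptions: the `EvidenceItem` and `CaseReport` classes, their field names, and the choice of JSON as the export format are hypothetical and do not describe Mindful's actual data model.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class EvidenceItem:
    """Hypothetical record for one captured post."""
    post_url: str
    captured_at: str      # ISO 8601 timestamp in UTC, e.g. "2025-01-05T14:30:00Z"
    screenshot_path: str  # where the screenshot was saved
    note: str = ""        # optional context added by the user


@dataclass
class CaseReport:
    """Hypothetical report that groups evidence under one title."""
    title: str
    items: List[EvidenceItem] = field(default_factory=list)

    def add(self, item: EvidenceItem) -> None:
        self.items.append(item)

    def to_json(self) -> str:
        # ISO 8601 strings in the same format and time zone sort
        # chronologically, so a plain string sort orders the evidence.
        ordered = sorted(self.items, key=lambda item: item.captured_at)
        return json.dumps(
            {"title": self.title, "items": [asdict(item) for item in ordered]},
            indent=2,
        )
```

Sorting by capture time gives the recipient a timeline of events, which is often what a platform, employer, or legal representative asks for first.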

Who Can Benefit

We are developing this tool to serve a wide range of individuals and communities who are disproportionately affected by online harassment and abuse, including:

- Journalists, especially those from marginalized communities, who face targeted harassment for their reporting and public presence

- Activists and advocates working on social, political, and environmental issues who are often attacked for speaking out

- Victims of cyberbullying, including young people and adults navigating harmful online behavior

- LGBTQ+ individuals experiencing sexual- and gender-based harassment or violence in digital spaces

- Election workers and civic leaders who are increasingly targeted with online abuse that threatens democratic participation

- Everyday social media users who encounter harassment, abuse, or intimidation and need tools to protect themselves and respond safely

Get Involved

Know folks who might need this tool, or who have expertise in managing online harassment? We would love to meet them! Reach out to asml@cyber.harvard.edu.

Team

Teagan D’Addeo, Research Assistant

Zach Deocadiz, Research Assistant