LLM Engine is an extensible backend service for creating, experimenting with, and deploying interactive large language model (LLM) agents across a variety of social contexts. The project focuses on developing LLM-powered discussion bots that make group conversations more meaningful, inclusive, and effective. Designed to support multimodal, intentional conversations in online, hybrid, and in-person settings, it is built for use at live events, in classrooms, and within other collaborative environments. By bringing together event conveners, educators, researchers, and developers, LLM Engine explores how AI can foster healthier human interaction and transform digital discourse.

Vision

To set new standards for how LLMs can be responsibly used to foster dialogue and build a healthier internet across a range of supported, widely used conversational platforms.

Goals

This project is focused on supporting healthier human interaction by:

- Curating a collection of LLM discussion bots that are thoughtfully designed, evaluated, and easy for event organizers to deploy in their programs

- Releasing an experimental open-source laboratory where researchers and developers can create, test, and improve additional discussion bots

- Developing benchmarking techniques to measure how large language models enhance multi-user, multi-turn interactions

- Engaging with users, researchers, and developers to advance public-interest experimentation and innovation in AI-powered discourse

Why This Matters

LLM Engine is intended for conversation organizers and moderators who wish to facilitate healthier discourse, particularly in academic settings, as well as those building agent-based solutions for them. Our framework is extensible enough to support a growing number of platforms, agent types, and discursive goals. We are currently most focused on using LLMs to promote the inclusion of diverse perspectives by supporting a variety of communication styles, resolving “pluralistic ignorance” in group settings, and enhancing conversation quality by surfacing novel information and overlooked perspectives.

How It Works

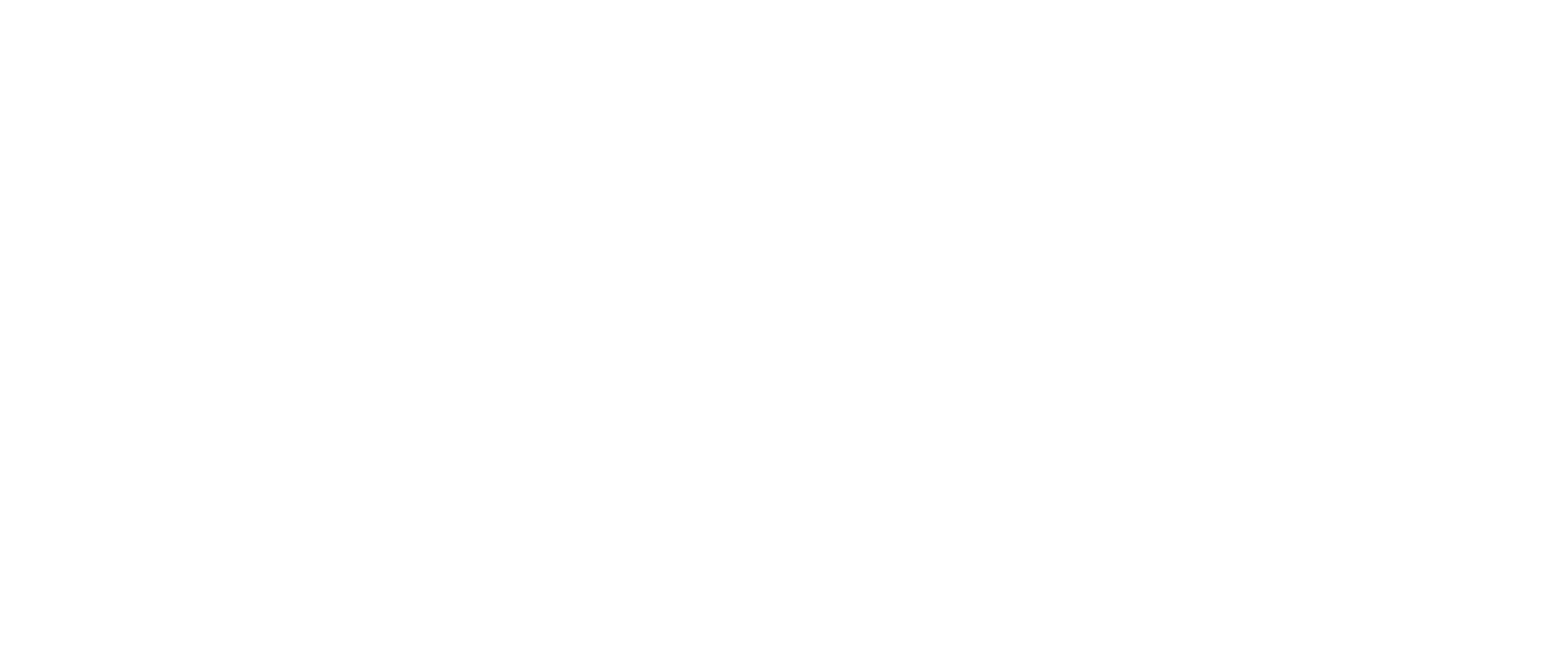

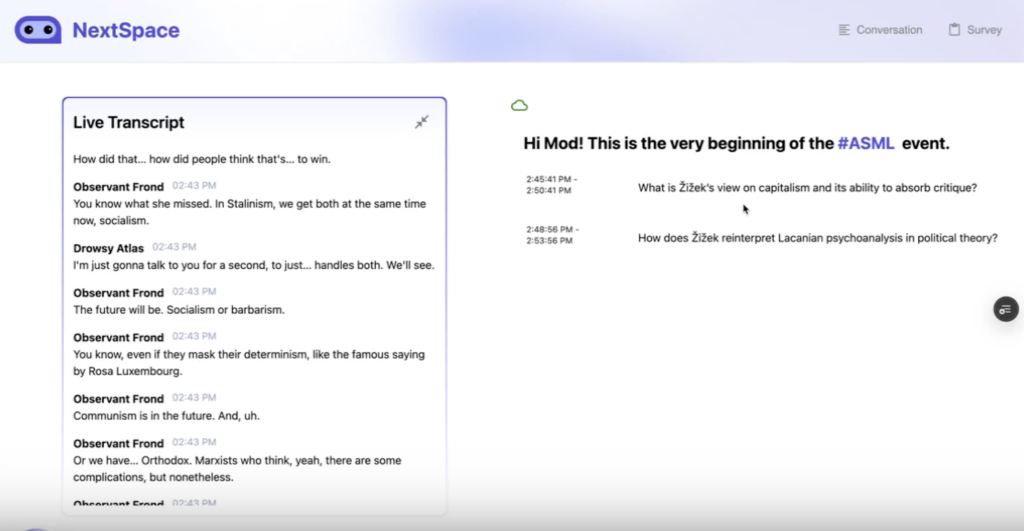

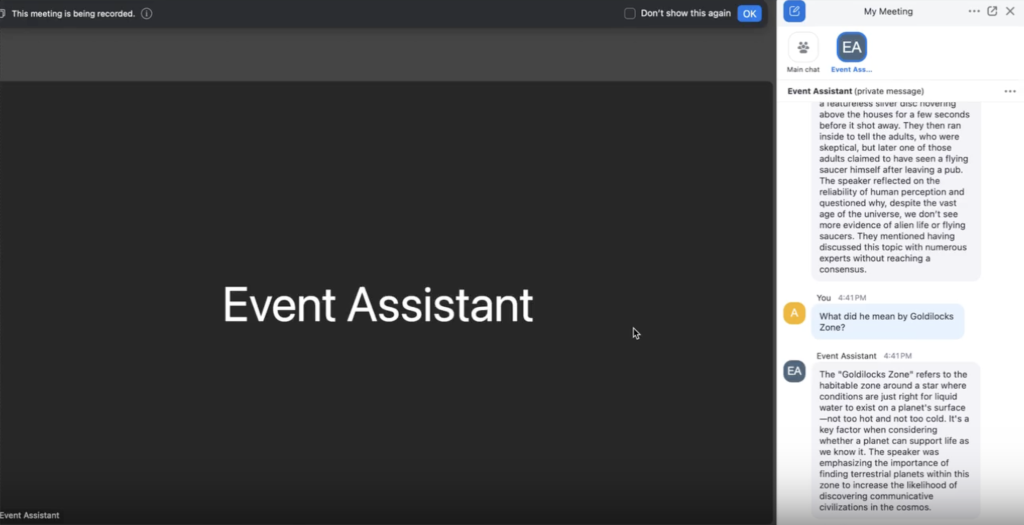

LLM Engine is a backend service that provides APIs for injecting agents into multi-user conversations on a variety of platforms, including NextSpace, Nymspace, Slack, and Zoom. It hosts multimodal agents that enrich all stages of a conversation, and it provides:

- A variety of out-of-the-box agents, including agents that promote civility, reflection, and playfulness, and agents that provide real-time support for event moderators and participants

- APIs to configure agents at creation or runtime with a variety of options, including changing prompts, models, RAG materials, timing intervals, and message processing thresholds

- Real-time transcription for virtual and hybrid events so agents have full conversational context

- Centralized storage of messages and transcripts, so that activity from multiple platforms can be kept together in a single conversation and used for post-event review and analysis
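
To make the configuration options above more concrete, here is a minimal Python sketch of how agent settings might be modeled and overridden at runtime. It is not LLM Engine's actual API: the `AgentConfig` class, its field names, the `apply_overrides` and `should_respond` helpers, and the rule that an agent acts when either a message threshold or a timing interval is reached are all assumptions made for illustration.

```python
from dataclasses import dataclass, fields, replace
from typing import Any, Dict, Tuple


@dataclass(frozen=True)
class AgentConfig:
    """Hypothetical agent settings; names are illustrative, not LLM Engine's."""
    prompt: str
    model: str
    rag_materials: Tuple[str, ...] = ()
    timing_interval_seconds: float = 60.0
    message_threshold: int = 5


def apply_overrides(config: AgentConfig, overrides: Dict[str, Any]) -> AgentConfig:
    """Return a new config with runtime overrides applied; reject unknown options."""
    known = {f.name for f in fields(config)}
    unknown = set(overrides) - known
    if unknown:
        raise ValueError(f"Unknown option(s): {sorted(unknown)}")
    return replace(config, **overrides)


def should_respond(config: AgentConfig, pending_messages: int,
                   seconds_since_last: float) -> bool:
    """Assumed policy: act once enough messages arrive or the interval elapses."""
    return (pending_messages >= config.message_threshold
            or seconds_since_last >= config.timing_interval_seconds)


# Settings chosen at creation time (the model name is a placeholder).
base = AgentConfig(
    prompt="Invite quieter participants to share their views.",
    model="example-model",
)

# A runtime change that lowers the message threshold for a small group.
tuned = apply_overrides(base, {"message_threshold": 3})
```

Keeping the configuration immutable and returning a new object for each override is one possible design choice: it leaves the creation-time settings intact, so a runtime change can be compared against them or rolled back.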

Who Can Benefit

LLM Engine is designed to serve a diverse range of audiences, including:

- Event conveners and educators who want to leverage technology to create more meaningful, inclusive, and engaging discussions

- Platform developers seeking to integrate LLM-powered facilitation tools into their systems and applications

- Researchers exploring the dynamics, impact, and potential of LLM-mediated conversations

Get Involved

We’re looking for collaborators, test partners, and ideas for new features and requirements. If you’re interested in experimenting with LLM discourse agents or shaping their development, we’d love to hear from you at asml@cyber.harvard.edu.